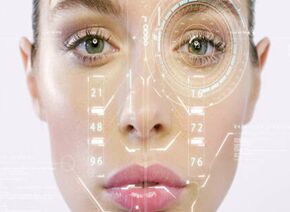

Google's image recognition AI will no longer identify people's gender

Google has announced that its image recognition AI will no longer identify people in images as a man or a woman. The change was revealed in an email to developers who use the company's Cloud Vision API, which makes it easy for apps and services to identify objects in images. In the email, Google said it wasn't possible to detect a person's true gender based simply on the clothes they were wearing. Google also said it was dropping gender labels for another reason: they could create or reinforce biases. From the email:

"Given that a person's gender cannot be inferred by appearance, we have decided to remove these labels in order to align with the Artificial Intelligence Principles at Google, specifically Principle #2: Avoid creating or reinforcing unfair bias."

Google's second AI principle states:

"AI algorithms and datasets can reflect, reinforce, or reduce unfair biases. We recognize that distinguishing fair from unfair biases is not always simple, and differs across cultures and societies. We will seek to avoid unjust impacts on people, particularly those related to sensitive characteristics such as race, ethnicity, gender, nationality, income, sexual orientation, ability, and political or religious belief."

Instead of labeling someone a man or a woman in an image, Google's Cloud Vision API image recognition tools will now classify that individual simply as a "person." The move should be a welcome step in the right direction for those who have long pointed out that systems from Google and other tech companies have trouble correctly categorizing people who identify as trans.

Per Business Insider's testing, it appears that the labeling changes have already taken place, so developers who use the API should see the changes immediately.